Research: Blended Reality

INTRODUCTION

The HP Blended Reality Grant draws upon CCAM’s role in connecting the arts and sciences through experimental technology and collaborative research.

Since 2016, the grant has supported a range of faculty- and student-led research at Yale.

Current Research Themes

The Machine as a Mind

While machine learning and artificial intelligence are often described in terms of science fiction narratives, they are not...

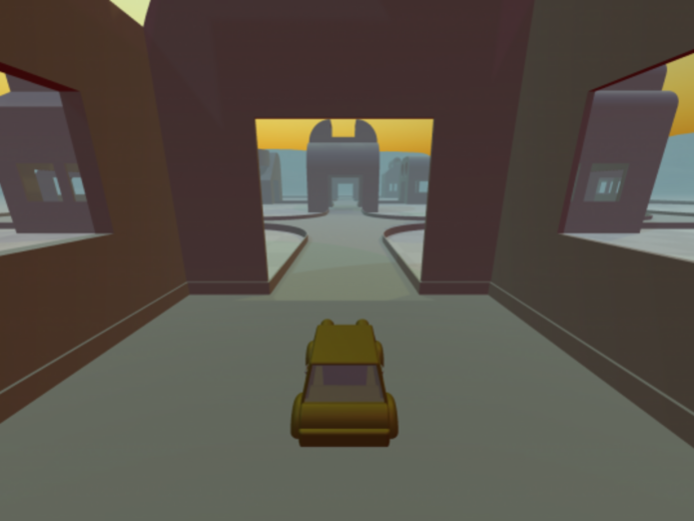

Emergent Play

Play is one of the primary means by which we learn to navigate the world around us. It is the way that we poke and prod at...

Spatial Gestures

With new media such as AR and VR, we are no longer working with two-dimensional abstractions. When we model a building, we can...

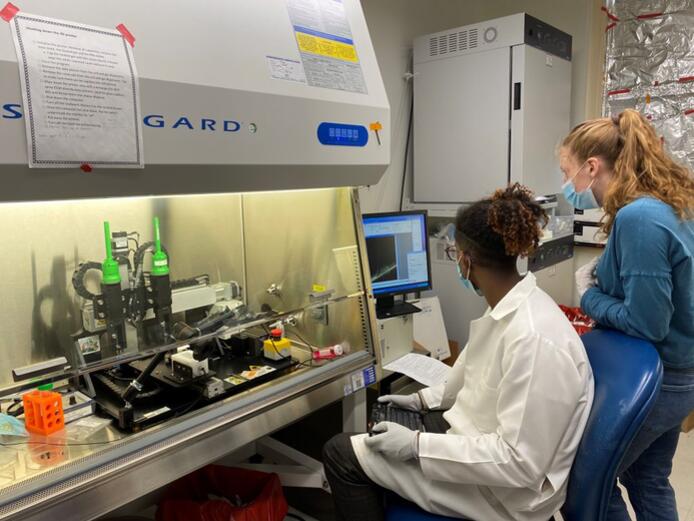

(Re) Constructing Industry

Industry in a global economy is vast and highly distributed. At the same time, that large scale requires every element to...

Digitizing the Material World

It has been said that when we change the way that we see the world, the world that we see changes. Using new technologies...

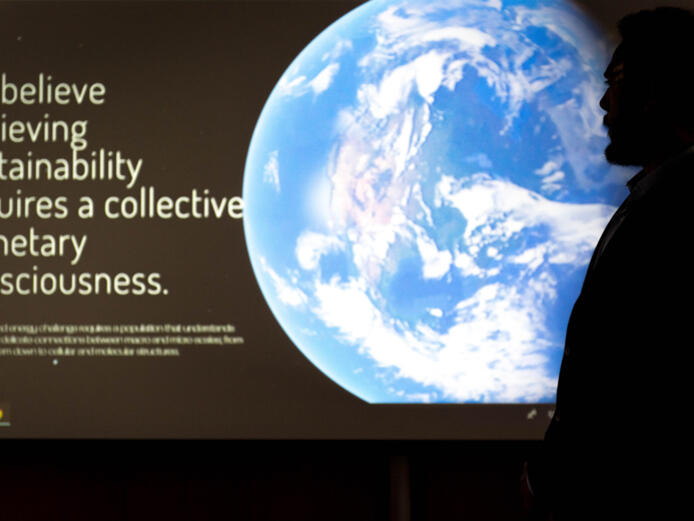

The Environment

A saying that we reference often at the Blended Reality Laboratory is that when you change the way you see the world, the world...